Most agencies treat AI as a science experiment. We treat it as engineering. With 100+ successful projects under our belt and a team of 30+ dedicated specialists, we’ve moved past the "what if" phase. We know what works in production and what breaks under pressure. Whether you are a startup needing a quick prototype or an enterprise modernizing a legacy system, we bring a practical, engineering-first mindset to AI.

Our AI Development Services at TwinCore

RAG (Retrieval-Augmented Generation)

Agentic Workflows

Fine-Tuning

Key Benefits of Our Custom AI Development

Custom AI Solutions We Build

Liveness Detection

Edge Deployment

Performance Metrics

Ready to build something real?

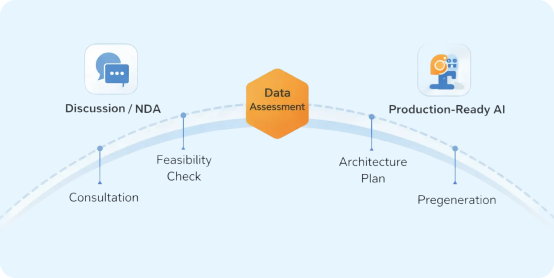

Stop reading about AI and start using it. Contact us today for a consultation. We’ll sign an NDA, look at your data, and give you an honest assessment of what’s possible.

Our Core Technical Competencies

Architecture

The Stack

Models We Deploy

Application

Industries We Serve

Logistics & Transportation

We build the systems that solve the "Last Mile" problem. Our engineering team implements Vehicle Routing Problem (VRP) solvers that factor in traffic windows, fuel costs, and driver shifts to cut deadhead miles. Beyond routing, we deploy predictive maintenance models using IoT sensor data to analyze vibration and engine load, identifying potential component failures weeks before a truck breaks down on the highway.
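
Production VRP solvers handle traffic windows, fuel costs, and driver shifts together. As a much smaller illustration of the core idea, the sketch below builds a single-vehicle route with a greedy nearest-neighbour heuristic and measures its length. The `Stop` type, the straight-line distances, and the heuristic itself are assumptions for illustration, not TwinCore's solver.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Stop:
    name: str
    x: float
    y: float


def distance(a: Stop, b: Stop) -> float:
    # Straight-line distance; a real system would use road-network travel times.
    return math.hypot(a.x - b.x, a.y - b.y)


def nearest_neighbour_route(depot: Stop, stops: list[Stop]) -> list[Stop]:
    """Greedy route: always drive to the closest unvisited stop, then return to the depot."""
    route = [depot]
    remaining = list(stops)
    current = depot
    while remaining:
        nxt = min(remaining, key=lambda s: distance(current, s))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    route.append(depot)
    return route


def route_length(route: list[Stop]) -> float:
    # Sum of leg distances, including the final leg back to the depot.
    return sum(distance(a, b) for a, b in zip(route, route[1:]))


if __name__ == "__main__":
    depot = Stop("depot", 0, 0)
    stops = [Stop("A", 2, 0), Stop("B", 5, 0), Stop("C", 1, 0)]
    route = nearest_neighbour_route(depot, stops)
    print([s.name for s in route], route_length(route))
```

Greedy routes are fast but can be far from optimal. Real solvers start from a heuristic like this and then improve it with local search under time-window and capacity constraints.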

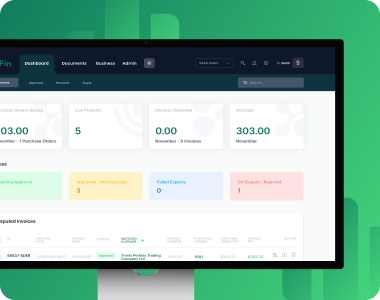

Fintech & Banking

In finance, a model is useless if it is slow or insecure, so we design for low latency and security from the start. We deploy anomaly detection engines that score transactions in under 200 milliseconds, blocking suspicious activity without creating friction for legitimate users. To support lending, we build credit risk models using gradient boosting on alternative data sources and back every decision with Explainable AI (XAI) techniques so it can withstand strict regulatory audits.
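
Scoring has to happen per transaction and in constant time. The simplified sketch below shows one way to do that. It keeps running statistics per card with Welford's online algorithm and flags amounts whose z-score exceeds a threshold. The per-card keying, the threshold of 3.0, and the five-transaction warm-up are illustrative assumptions. Production engines typically combine many features and models rather than a single amount statistic.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online mean/variance: O(1) work per new value."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    def std(self) -> float:
        # Sample standard deviation; zero until at least two values are seen.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


class AmountScorer:
    def __init__(self, threshold: float = 3.0, min_history: int = 5):
        self.threshold = threshold
        self.min_history = min_history
        self.stats: dict[str, RunningStats] = defaultdict(RunningStats)

    def score(self, card_id: str, amount: float) -> tuple[float, bool]:
        """Return (z_score, is_suspicious); only unusually LARGE amounts are flagged."""
        s = self.stats[card_id]
        z = 0.0
        if s.count >= self.min_history and s.std() > 0:
            z = (amount - s.mean) / s.std()
        suspicious = z > self.threshold
        if not suspicious:
            s.update(amount)  # learn only from amounts that were not flagged
        return z, suspicious
```

Not updating the statistics with flagged amounts keeps a single fraudulent burst from shifting the card's baseline.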

Healthcare & MedTech

We handle sensitive patient data with strict HIPAA-compliant isolation. Our work includes training computer vision models on DICOM data to assist radiologists in spotting anomalies with high precision, as well as building NLP systems that automate patient triage. By automating the backend, we allow medical providers to focus on care rather than administrative overhead.

E-Commerce & Retail

We go beyond generic "people also bought" widgets to build engines that drive Gross Merchandise Value (GMV). We engineer collaborative filtering systems that model deeper user intent and taste profiles, coupled with dynamic pricing algorithms that monitor competitor data to adjust margins in real time. We also implement visual search, allowing users to upload photos to find similar products in your catalog.
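
Item-item similarity over purchase history is the simplest building block of collaborative filtering. The sketch below assumes binary purchase data held in memory, and the product names are made up. It scores each item a user has not bought by its cosine similarity to the items they have bought. Production systems usually replace this with learned embeddings or matrix factorization at far larger scale.

```python
import math
from collections import defaultdict


def item_buyers(purchases: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert user -> items into item -> set of users who bought it."""
    buyers: dict[str, set[str]] = defaultdict(set)
    for user, items in purchases.items():
        for item in items:
            buyers[item].add(user)
    return buyers


def cosine(a: set[str], b: set[str]) -> float:
    # Cosine similarity of two binary vectors represented as sets of user ids.
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


def recommend(user: str, purchases: dict[str, set[str]], k: int = 3) -> list[str]:
    """Rank unowned items by summed similarity to the user's owned items."""
    buyers = item_buyers(purchases)
    owned = purchases.get(user, set())
    scores: dict[str, float] = defaultdict(float)
    for candidate in buyers:
        if candidate in owned:
            continue
        for item in owned:
            scores[candidate] += cosine(buyers[candidate], buyers[item])
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:k] if score > 0]
```

For example, a user who bought a tent and a stove would be offered a lantern bought by a similar customer ahead of unrelated items.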

Cloud Infrastructure & Deployment

We are cloud-agnostic but opinionated. We architect secure, scalable environments that optimize for cost and latency. We deploy where your data already lives to minimize egress fees and security risks.

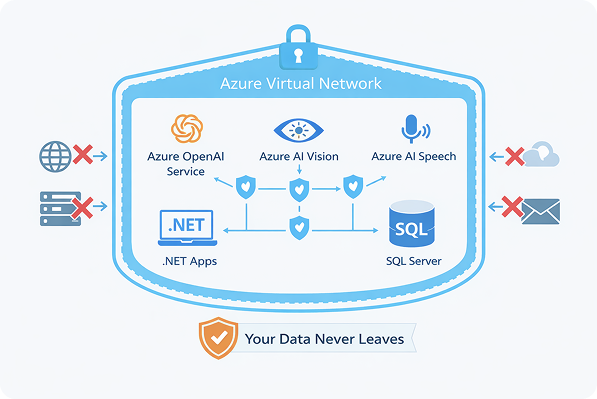

The Microsoft Azure Ecosystem

For enterprises already invested in the Microsoft stack, Azure is the logical choice. We specialize in deploying Azure OpenAI Service, which gives you access to GPT-4o and DALL-E 3 with one critical difference: we configure it to be reachable only through private endpoints inside your own compliant virtual network. We ensure your data is never used to train public models.

Beyond the LLMs, we use Azure AI Vision for high-throughput optical character recognition (OCR) and object detection that integrates natively with Azure Storage. For voice interfaces, we implement Azure AI Speech to build conversational apps that handle real-time translation and transcription, seamlessly connecting these services to your existing .NET and SQL Server infrastructure.

The AWS (Amazon Web Services) Stack

When building on AWS, we use Amazon Bedrock for flexibility. Bedrock lets us swap between foundation models (such as Claude, Llama, or Titan) through a single API, so you aren't locked into one AI vendor.
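
Bedrock's Converse API takes the model ID as a parameter, which is why switching vendors can be a configuration change rather than a rewrite. The minimal sketch below assumes a boto3 `bedrock-runtime` client and that model access is already enabled in your account. The model IDs are examples, and their availability varies by region.

```python
from typing import Any

# Example Bedrock model IDs; check which ones are enabled in your account and region.
MODELS = {
    "claude": "anthropic.claude-3-haiku-20240307-v1:0",
    "llama": "meta.llama3-8b-instruct-v1:0",
    "titan": "amazon.titan-text-express-v1",
}


def ask(client: Any, model_key: str, prompt: str, max_tokens: int = 512) -> str:
    """Send one user turn through the Converse API; only modelId changes per vendor."""
    response = client.converse(
        modelId=MODELS[model_key],
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The reply text is at output.message.content[0].text in the Converse response.
    return response["output"]["message"]["content"][0]["text"]
```

Usage would look like `ask(boto3.client("bedrock-runtime", region_name="us-east-1"), "claude", "Summarize this contract")`. Switching to Llama means changing only the `model_key` argument.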

For conversational interfaces, we build with Amazon Lex, which uses the same deep learning technology that powers Alexa, to create sophisticated, context-aware chatbots. We pair it with Amazon Polly for lifelike neural text-to-speech. For enterprise search, we implement Amazon Q to create internal knowledge engines that let your employees query unstructured company data securely and instantly.

Why Us

Comparison Table (SaaS vs In-House vs TwinCore)

| Feature | Generic SaaS Wrapper | In-House Hiring | TwinCore Custom Dev |
|---|---|---|---|
| Data Privacy | Low (Data often trains their models) | High | High (Private Cloud/On-Prem) |
| Cost Structure | Per-user / Per-token (Scales up) | High Fixed Salaries ($200k+/yr) | Project-Based / One-time |
| Ownership | You rent the software | You own it | You own the source code |
| Time to Launch | Instant | 6-12 Months | 4-8 Months |
| Customization | Zero (One size fits all) | High | Exact Fit |

Delivery Process

We are not a "fire and forget" vendor. We are your technical partners:

100+ Projects. Zero Vendor Lock-in.

What our clients say about us


Frequently Asked Questions

Absolutely not. This is the biggest risk in AI, and we architect specifically to prevent it:

- For External Models. If we use Azure OpenAI, we configure it with "Zero Data Retention" policies. Microsoft does not use enterprise data to train its base models.

- For Local Models. If you require total isolation, we deploy open-source models (like Llama 3 or Mistral) directly on your own private cloud or on-premises servers. Your data never leaves your firewall.

We sign strict NDAs before seeing a single row of your database.

Yes. This is our specialty. While most data science happens in Python, the business world runs on .NET and Java. We build secure bridges (using gRPC, REST APIs, or ML.NET) that let your legacy C# and Java applications consume modern AI services seamlessly.

Yes. You own everything. Unlike SaaS platforms where you "rent" the intelligence, with TwinCore, you are paying for custom engineering. Upon delivery, you receive:

- The full source code;

- The model weights and training scripts;

- The containerized environment (Docker/Kubernetes config).

There is no vendor lock-in. Your internal team can take our code and maintain it tomorrow.

We use engineering guardrails:

- RAG Architecture. We force the AI to cite sources from your own uploaded documents. If the answer isn't in your documents, the system is programmed to say "I don't know" rather than make something up.

- Deterministic Layers. For critical logic (like pricing or safety checks), we bypass the LLM and use standard code or classical regression models that are fully deterministic.
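
The "I don't know" behaviour described in the RAG bullet usually depends on a retrieval threshold. If no passage is relevant enough, the system refuses before the LLM is ever called. In the minimal sketch below, word-overlap scoring stands in for vector similarity. The `Passage` type, the `min_score` of 0.3, the prompt wording, and the `llm` callable are illustrative assumptions.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Passage:
    source: str  # e.g. the uploaded document's file name, used for citations
    text: str


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(question: str, passage: Passage) -> float:
    # Fraction of question words found in the passage; a stand-in for embedding similarity.
    q = tokens(question)
    return len(q & tokens(passage.text)) / len(q) if q else 0.0


def build_prompt(question: str, corpus: list[Passage],
                 min_score: float = 0.3, top_k: int = 3) -> Optional[str]:
    """Return a prompt grounded in cited passages, or None if nothing is relevant enough."""
    scored = sorted(((relevance(question, p), p) for p in corpus),
                    key=lambda sp: sp[0], reverse=True)
    hits = [p for score, p in scored[:top_k] if score >= min_score]
    if not hits:
        return None
    context = "\n".join(f"[{p.source}] {p.text}" for p in hits)
    return (
        "Answer using only the sources below and cite them by name. "
        "If they do not contain the answer, reply \"I don't know\".\n\n"
        f"{context}\n\nQuestion: {question}"
    )


def answer(question: str, corpus: list[Passage], llm: Callable[[str], str]) -> str:
    prompt = build_prompt(question, corpus)
    if prompt is None:
        return "I don't know"  # refuse without calling the model at all
    return llm(prompt)
```

The refusal happens in ordinary code, so it does not depend on the model choosing to follow its instructions.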

Custom AI is not a $50/month subscription, but it shouldn't cost millions either. Typical AI project timelines vary:

- Proof of Concept (POC). We usually start with a fixed-price pilot (typically 2–4 weeks) to validate feasibility. This keeps your risk low.

- Production MVP. A full deployment usually ranges from 3 to 6 months depending on data complexity and integration needs.

We provide a detailed technical roadmap and budget estimate after our initial discovery call.

Not necessarily. We build systems designed to be maintained by your existing software engineers. We set up MLOps pipelines that automate retraining and monitoring. However, if you want us to stay on for maintenance, we offer retainer packages that cover model updates, dependency patching, and performance optimization.
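
Automated retraining is usually triggered by a monitoring check. One common choice is the Population Stability Index (PSI), which compares a feature's distribution at training time with what the model sees in production. The sketch below assumes the data is already binned into proportions. It uses the widely cited rule of thumb that a PSI above 0.2 signals significant drift. Both the binning and the threshold are illustrative assumptions, not fixed settings.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions)."""
    if len(expected) != len(actual):
        raise ValueError("bin counts must match")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(expected: list[float], actual: list[float], threshold: float = 0.2) -> bool:
    # A scheduled pipeline job could call this and kick off retraining when it returns True.
    return psi(expected, actual) > threshold
```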

Beware of anyone who promises 100% accuracy in AI. They are lying. We define success metrics before we write code. We establish a baseline (e.g., "current human error rate is 15%") and aim to beat it significantly (e.g., "model error rate of 5%"). If the technology isn't mature enough to solve your specific problem with high confidence, we will tell you in the Discovery phase.
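
With the illustrative numbers above, the improvement over the baseline can be stated as a relative error reduction:

```latex
\text{relative reduction} = \frac{e_{\text{baseline}} - e_{\text{model}}}{e_{\text{baseline}}} = \frac{0.15 - 0.05}{0.15} \approx 0.667
```

In other words, a 5% model error rate would eliminate about two thirds of the errors made by the 15% baseline process.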
